Apple is rolling out a suite of accessibility-focused updates designed to make reading, browsing, and using its devices easier for a broad range of users. Ahead of Global Accessibility Awareness Day, Apple outlined new features and enhancements across its ecosystem, including Accessibility Nutrition Labels in the App Store, Magnifier for Mac, Braille Access, and Accessibility Reader. Additional updates are planned for Live Listen, visionOS, Personal Voice, and other tools. Apple is positioning these changes as part of a broader commitment to building technology that serves everyone, across devices and contexts.

Accessibility Nutrition Labels in the App Store

Apple’s Accessibility Nutrition Labels are designed to give users a clearer view of which accessibility features apps and games provide before download. These labels will highlight whether apps support features such as VoiceOver, Voice Control, Larger Text, and other options that can make the experience usable for people with a range of needs. The intent is to simplify the process of choosing apps by making the presence of accessibility features immediately visible in the Store listing.

For users who rely on assistive technologies, these labels can function as a critical signal when evaluating apps and games. They provide a quick, at-a-glance sense of what tools are available and how an app might accommodate unique requirements. The labeling system is designed to be comprehensive enough to cover common accessibility features, giving users a more predictable understanding of how an app will function with assistive tech. By surfacing this information directly in the Store, Apple aims to reduce the trial-and-error approach many users experience when seeking apps that fit their needs.

From a product perspective, Accessibility Nutrition Labels also create a framework for developers. App creators can anticipate what features users expect and align their design and testing processes accordingly. This can lead to broader adoption of accessibility features across the App Store ecosystem, as developers recognize that clear labeling can influence discovery and adoption. The labels work in tandem with the broader accessibility tools Apple has introduced across platforms, reinforcing a cohesive narrative around inclusive design.

In practice, the labeling system can influence how users approach app selection on a daily basis. Users who require specific features can filter and compare options more efficiently, saving time and reducing frustration. For developers, the labels can drive a more deliberate integration of accessibility features into the core app design, prompting better onboarding, documentation, and support within app experiences. The end result is a more inclusive app landscape where users can feel confident in the tools available to them.

Apple emphasizes that accessibility is a core part of its product strategy, and the Nutrition Labels illustrate how accessibility considerations are embedded into the broader app discovery process. By providing transparent information about what accessibility features an app offers, Apple seeks to empower users to make informed choices and to encourage developers to invest in accessible design from the outset.

Magnifier for Mac and Desk View

Magnifier for Mac brings a familiar accessibility tool from iPhone and iPad to the Mac. The feature connects to a user’s camera and enables precise zooming, enhancing the ability to read, recognize, and interact with the surrounding environment. The integration extends the concept of magnification beyond handheld devices, giving Mac users a flexible way to magnify their surroundings for improved clarity.

One notable capability is the option to connect an iPhone to a Mac, enabling focus on particular areas of interest with greater precision. This cross-device capability fosters a seamless workflow for users who rely on magnification for reading text, examining details, or performing tasks with enhanced visual clarity. The solution is designed to be intuitive, building on existing familiarity with Magnifier while expanding its reach to desktop contexts.

Magnifier for Mac also supports Desk View, an existing Continuity Camera mode, to assist users who need to read documents or view content on a desk surface. This mode can provide a practical reading experience by presenting documents, pages, or notes in a way that accommodates viewing angles, lighting, and other real-world considerations. Desk View support adds versatility to how users engage with printed material, schematics, or handwritten notes, improving accessibility in educational, professional, and personal settings.

For users, the Magnifier and Desk View combination represents a meaningful enhancement to daily tasks. It offers a tangible way to access information that might otherwise be challenging to read, thereby reducing barriers to participation in work, study, and everyday activities. For developers and content creators, the emphasis on accessibility through magnification underscores the importance of legibility and visual design, encouraging clearer typography and layouts that scale well across magnification levels.

The Magnifier for Mac initiative aligns with Apple’s broader accessibility strategy by leveraging hardware capabilities (such as cameras) and software integration to deliver practical, user-friendly solutions. The goal is to augment the Mac experience with tools that are familiar, reliable, and adaptable to different contexts, from reading menus and documents to inspecting details in complex visuals.

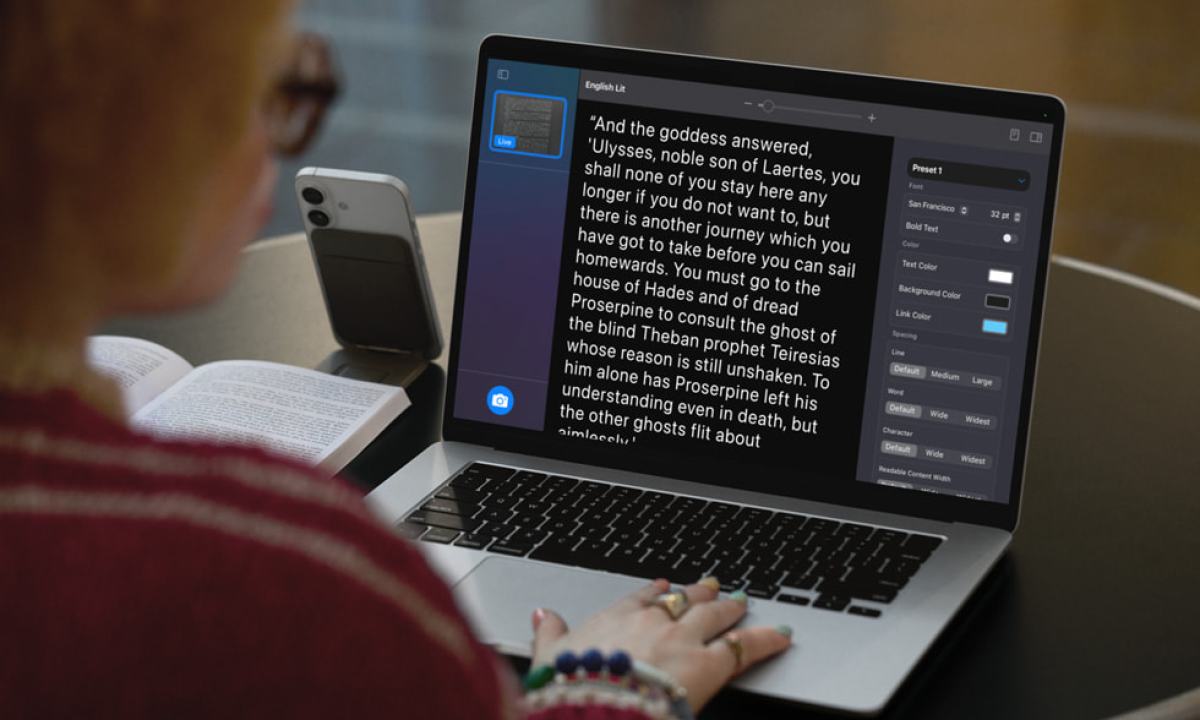

Accessibility Reader Across Apple Platforms

Accessibility Reader is described as a cross-platform tool designed to assist users with a wide range of disabilities, including low vision and dyslexia. The feature supports extensive text customization, enabling adjustments to font, color, spacing, and other typographic properties. This level of control helps users tailor on-screen text to their preferences, improving readability and comprehension.

A key advantage of Accessibility Reader is its universality: it can be launched from any app, making it a flexible and readily accessible option regardless of the user’s current task. Moreover, the feature is built into the Magnifier app across iOS, iPadOS, and macOS, ensuring continuity of experience as users move between devices or contexts. The cross-platform availability reinforces a consistent approach to accessibility that users can count on in daily life.

By enabling targeted focus on specific sections of text, Accessibility Reader can facilitate more efficient reading, study, and information processing. This is particularly valuable for users with cognitive or perceptual differences who benefit from controlled pacing and emphasis. The ability to customize typography and layout supports more comfortable and inclusive engagement with digital content, from emails and articles to e-books and workplace documents.

From an ecosystem perspective, Accessibility Reader strengthens the interconnected nature of Apple’s accessibility features. It complements magnification, high-contrast options, and text-to-speech tools, creating a layered suite of options that users can combine to meet their individual needs. For developers, this feature underscores the importance of designing content with legibility in mind, encouraging better contrast, spacing, and readability in app interfaces.

In practical terms, Accessibility Reader is positioned as a universal, on-demand assistive technology embedded in the platform experience. It can be invoked quickly, supports customization, and remains consistent across devices, which can reduce the learning curve for new users and deepen confidence in using Apple devices for long-form reading and content consumption.

Live Captions on Apple Watch and Hearing Health Support

The accessibility initiative also includes a new Live Captions feature on Apple Watch, aimed at helping users follow conversations in real time. By delivering captions for spoken dialogue, the feature assists users who are hard of hearing or have other hearing-related challenges. It works through a paired iPhone and can be used alongside the hearing health features available on AirPods Pro 2, creating a cohesive approach to accessible communication.

Live Captions on Apple Watch expands the reach of real-time transcription beyond iPhone and iPad, bringing captioning capabilities to a wearable device. The integration with AirPods Pro 2’s hearing health features suggests a holistic approach to supporting users during daily interactions, whether in conversations, meetings, or casual social settings. This extension reflects Apple’s strategy of embedding accessibility tools directly into wearable and audio experiences, making inclusive technology more pervasive.

Tim Cook, Apple’s CEO, highlighted the company’s commitment to accessibility in a straightforward, values-driven message. “At Apple, accessibility is part of our DNA,” he stated. “Making technology for everyone is a priority for all of us, and we’re proud of the innovations we’re sharing this year. That includes tools to help people access crucial information, explore the world around them, and do what they love.” The quote reinforces Apple’s intent to integrate accessibility into its product philosophy and everyday use.

The Live Captions capability, especially when paired with iPhone and AirPods Pro 2, signals a broader trend toward seamless, multimodal accessibility. Users can expect captions to appear in real time, supporting clearer communication in a variety of contexts—from one-on-one conversations to group discussions in public or work environments. The combination of hardware and software enhancements demonstrates how Apple aims to remove barriers by integrating assistive features across devices and peripherals.

Other Updates and Ecosystem Context: Live Listen, visionOS, Personal Voice, and More

In addition to the flagship features described above, Apple is signaling ongoing updates to Live Listen, visionOS, Personal Voice, and other components of its accessibility ecosystem. While specific technical details are not exhaustively described in the initial announcement, the emphasis is clear: Apple intends to broaden how users interact with sound, visuals, and personalized communication across its platforms.

Live Listen, which uses an iPhone as a remote microphone and streams ambient audio to AirPods or compatible hearing devices, is positioned as receiving enhancements that could improve hearing experiences in everyday environments. Updates to visionOS, the operating system for the Apple Vision Pro spatial computing headset, point to a broader strategy for inclusive interfaces that extend beyond traditional screens, enabling accessible interactions in augmented or mixed-reality contexts. Personal Voice, which lets users at risk of losing their ability to speak create a synthesized voice that sounds like their own, is highlighted as part of the suite of updates aimed at expanding how individuals express themselves and participate in conversations.

The overarching implication is that Apple seeks to create a more inclusive, multimodal experience across devices—phones, tablets, wearables, and beyond. This approach aligns with broader trends in accessibility that emphasize intuitive control schemes, real-time feedback, and adaptive interfaces responsive to diverse sensory needs. Users can anticipate that these updates will gradually roll out or integrate into existing software updates, with attention paid to interoperability and user education so that people can take full advantage of the new tools.

From a user perspective, these updates promise to streamline how accessibility features are discovered, configured, and used in everyday life. Whether it’s more informative app listings, enhanced magnification and desk-based reading, cross-platform text customization, real-time captions, or broader hearing and vision assistance tools, the goal is to reduce friction and enable participation in a wide range of activities. For developers and designers, the updates underscore the importance of inclusive design, the value of accessible content and interactions, and the potential to reach a wider audience through built-in accessibility capabilities.

Corporate Commitment and the Global Context

Apple’s announcements are framed around Global Accessibility Awareness Day, underscoring the company’s intent to position accessibility as a central, year-round priority rather than a peripheral feature. The emphasis on tools that help people access crucial information, explore the world, and do what they love suggests a holistic view of technology as a democratic medium—one that empowers people regardless of physical abilities or constraints.

From a broader industry perspective, Apple’s approach could influence how other technology companies think about accessibility in product design, marketing, and developer ecosystems. By providing concrete labeling in the App Store, cross-device accessibility tools, and real-time supportive features, Apple sets a precedent for integrating accessibility into core product narratives and user expectations. If successful, such strategies may encourage more developers to invest in accessible design, knowing that users actively seek and value transparent information about an app’s accessibility features.

Tim Cook’s comments reinforce the company’s stance that accessibility is not a niche concern but a central element of product strategy. The explicit language about accessibility as “part of our DNA” signals a long-term commitment to evolving solutions that work across contexts, languages, and communities. This kind of public commitment can build consumer trust and set a tone for future announcements and product iterations.

Global Accessibility Awareness Day serves as a backdrop for these initiatives, highlighting the importance of accessibility beyond Apple’s immediate user base. The efforts reflect a convergence of technology, design, and social responsibility—an emphasis on making digital tools usable and valuable for as many people as possible. For users and advocacy groups, Apple’s updates may herald broader opportunities for participation, education, and empowerment through technology.

Implications for Users, Developers, and the Ecosystem

- For users: The new Accessibility Nutrition Labels can streamline app discovery for assistive tech users, helping them quickly identify apps that meet their needs. Magnifier for Mac and Desk View expand practical ways to access text and information in real-world settings, while Accessibility Reader offers customizable reading experiences across platforms. Live Captions on Apple Watch and integration with AirPods Pro 2 strengthen real-time communication support in diverse environments.

- For developers: There is a clear signal to incorporate accessible features early in the design process and to document those features in a standardized way. The Accessibility Nutrition Labels create a new discovery channel where apps with robust accessibility support can stand out. The cross-platform nature of Accessibility Reader and the wider ecosystem updates may encourage adoption of accessible typography, color contrasts, and layout choices that work well under magnification or in captioned contexts.

- For the broader ecosystem: A unified, accessible experience across devices (iPhone, iPad, Mac, Apple Watch, and AirPods) fosters a cohesive user journey. The emphasis on real-time captions, enhanced magnification, and adjustable reading experiences aligns with an increasingly diverse user base that relies on adaptive technologies. These efforts may also influence future hardware and software integrations designed to support accessibility in new form factors, including augmented reality and spatial computing contexts.

- For accessibility advocacy: Apple’s framing around Global Accessibility Awareness Day helps amplify ongoing conversations about inclusive technology. The company’s public commitment, coupled with practical tool releases, can stimulate dialogue, research, and collaboration among accessibility professionals, developers, and users who rely on assistive technologies for daily activities.

Conclusion

Apple’s latest announcements mark a broad and substantive push to embed accessibility into the daily fabric of its platforms and products. With Accessibility Nutrition Labels in the App Store, Magnifier for Mac with Desk View, Accessibility Reader across iOS, iPadOS, and macOS, and Live Captions on Apple Watch alongside hearing health features in AirPods Pro 2, the company is delivering a multilayered approach to inclusive technology. Updates to Live Listen, visionOS, Personal Voice, and related tools further underscore Apple’s intent to expand how people interact with sound, text, and visuals in ways that accommodate diverse needs and preferences.

The company frames these changes as part of a deep-seated commitment to accessibility, reinforced by Tim Cook’s assertion that accessibility is “part of our DNA.” By prioritizing clearer app discovery, cross-device assistive features, and real-time communication support, Apple aims to empower users to access crucial information, explore the world around them, and do what they love—without unnecessary barriers. As these tools roll out and mature, they have the potential to shape user expectations, influence app design standards, and expand the practical reach of accessible technology across everyday life.